🐝 Tjoskar's Blog

I write about stuff, mostly for myself

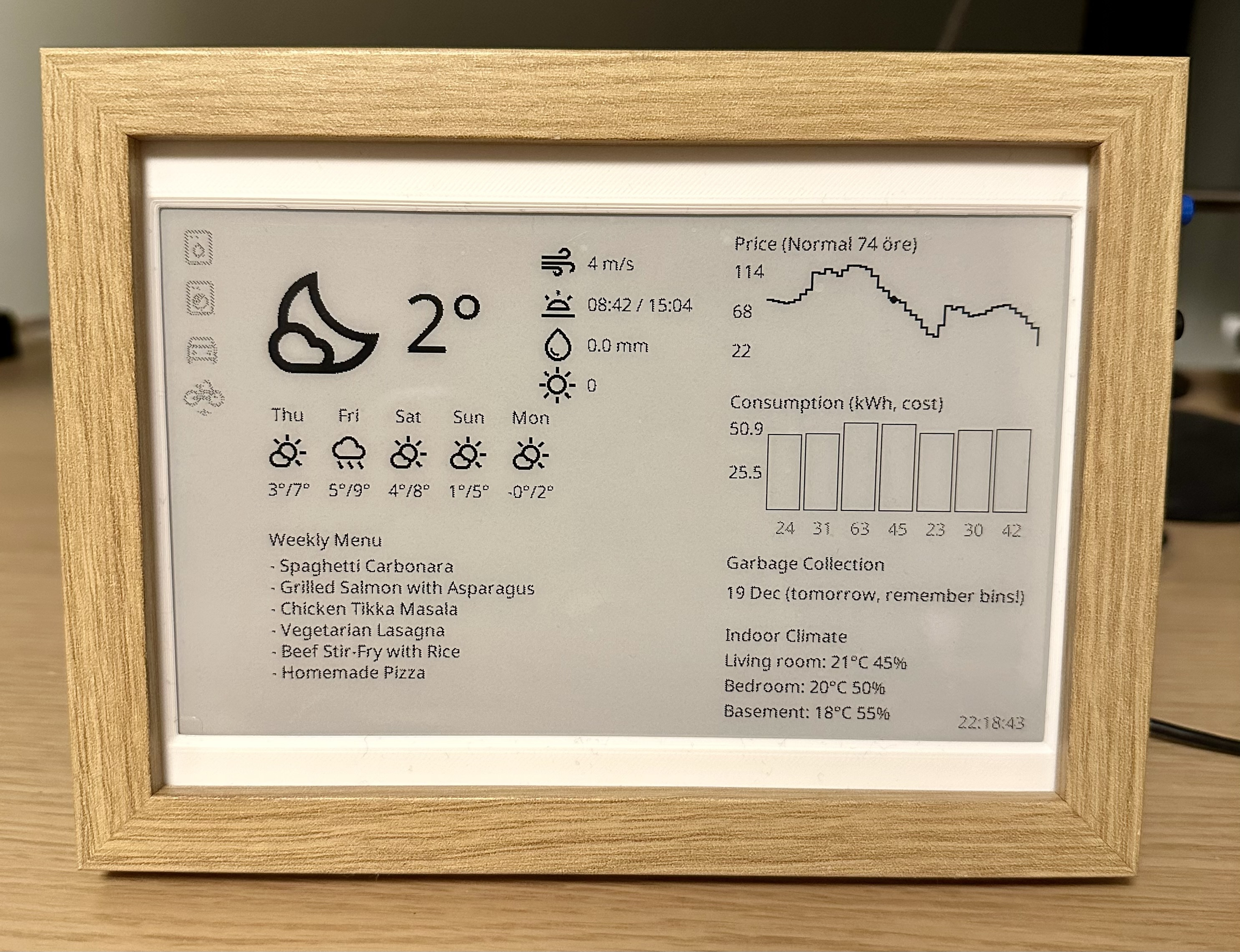

Use an E‑Ink display to show information about your house

Want to build this exact dashboard? While this post covers the technical overview, I have written a comprehensive book that guides you through every single step—from soldering the buttons to configuring the Linux services. It includes all the source code and saves you hours of trial and error.

What I’ve used for this project:

- A Raspberry Pi Zero 2 W

- Waveshare 7.5” e-Paper HAT

- An IKEA frame

- Two physical buttons

- One LED

- Some solder and a few wires

I got this idea soon after we moved into our house and set up Home Assistant. I loved the data HA provides, but I disliked needing my phone every time I wanted to glance at energy use or toggle something. I wanted ambient, glanceable information in the kitchen. I also prefer the feeling of physical buttons over tapping on a screen. I didn’t want a bright LCD; E‑Ink felt more appropriate. At first I considered a color E‑Ink panel, but I couldn’t find any with a fast enough refresh rate (everything I looked at took ~40 s to redraw the screen).

When I started this project, I searched around and found a few home dashboards that used E‑Ink displays. Most of them followed the same pattern: spin up a browser (often headless Chrome), load a web page, take a screenshot, then pipe that bitmap to the E‑Ink controller. Clever, but heavy. The workflow looks like this:

- Event: a light turns on

- Headless Chrome process spins up

- Chrome loads a page

- Screenshot captured

- Script forwards image to controller

The latency can be up to a minute due to startup overhead, and error handling becomes complex.

I opted to render directly with Pillow (PIL) on the Pi Zero 2 W. It takes just a few milliseconds to compose the image, then the display’s own refresh time dominates (~1 s). Simpler, faster, and fewer components to babysit.
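To give a feel for what composing an image with Pillow involves, here is a minimal sketch. The function name `compose_lines`, the 800×480 size, and the row layout are assumptions for illustration, not the dashboard's actual code.

```python
import time

from PIL import Image, ImageDraw, ImageFont


def compose_lines(lines, size=(800, 480)):
    """Draw each string on its own row of a 1-bit image with a white background."""
    image = Image.new("1", size, 255)  # mode "1": one bit per pixel, 255 = white
    draw = ImageDraw.Draw(image)
    font = ImageFont.load_default()
    y = 20
    for line in lines:
        draw.text((20, y), line, font=font, fill=0)  # 0 = black
        y += 24  # assumed row height for the default font
    return image


start = time.perf_counter()
panel = compose_lines(["Engine heater: on", "Washing machine: off"])
elapsed_ms = (time.perf_counter() - start) * 1000
print(f"Composed {panel.size[0]}x{panel.size[1]} image in {elapsed_ms:.1f} ms")
```

The printed time depends on the hardware and on how much is drawn, so treat it as a way to measure your own setup rather than a benchmark.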

I briefly considered a Pi Pico, but 264 KB RAM felt tight for image buffers and font rendering. The Pi Zero it is.
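A quick back-of-the-envelope calculation shows why the Pico's memory feels tight. The 800×480 resolution is an assumption (check the datasheet for your panel revision), and the 8-bit working image is only one plausible intermediate format.

```python
def buffer_bytes(width, height, bits_per_pixel):
    """Bytes needed for one full-screen image buffer."""
    return width * height * bits_per_pixel // 8


WIDTH, HEIGHT = 800, 480  # assumed panel resolution
PICO_RAM = 264 * 1024     # 264 KB of RAM on the Pi Pico

one_bit = buffer_bytes(WIDTH, HEIGHT, 1)    # the black/white buffer sent to the panel
grayscale = buffer_bytes(WIDTH, HEIGHT, 8)  # an 8-bit working image while drawing

print(f"1-bit buffer:  {one_bit} bytes")
print(f"8-bit buffer:  {grayscale} bytes")
print(f"Pico RAM:      {PICO_RAM} bytes")
```

Under these assumptions a single 1-bit buffer fits comfortably, but an 8-bit working image alone is already larger than the Pico's total RAM, before fonts and program state are counted.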

Setup

I flashed Raspberry Pi OS, enabled the SPI interface, and installed the following packages: git python3-pip python3-pil python3-numpy python3-spidev
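If the display stays blank later on, a quick sanity check is whether the SPI device nodes exist at all. The sketch below simply looks for `spidev*` entries under `/dev`; the helper name `spi_devices` is made up for this example.

```python
import glob
import os


def spi_devices(dev_dir="/dev"):
    """Return the SPI device nodes found in dev_dir, e.g. /dev/spidev0.0."""
    return sorted(glob.glob(os.path.join(dev_dir, "spidev*")))


if __name__ == "__main__":
    devices = spi_devices()
    if devices:
        print("SPI is enabled:", ", ".join(devices))
    else:
        print("No SPI devices found; enable SPI and reboot.")
```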

I set the resistor on the HAT to 0.47 Ω.

Then I created a file called hello-world.py with the following content (for example with vim: vi hello-world.py).

```python
from driver import EPD
from PIL import Image, ImageDraw, ImageFont

epd = EPD()
epd.init()
epd.Clear()

image = Image.new('1', (epd.width, epd.height), 255)
draw = ImageDraw.Draw(image)
font = ImageFont.load_default()
draw.text((100, 100), "Hello World", font=font, fill=0)

epd.display(epd.getbuffer(image))
epd.sleep()
```

Now, try it out:

```
python3 hello-world.py
```

Set up the frame

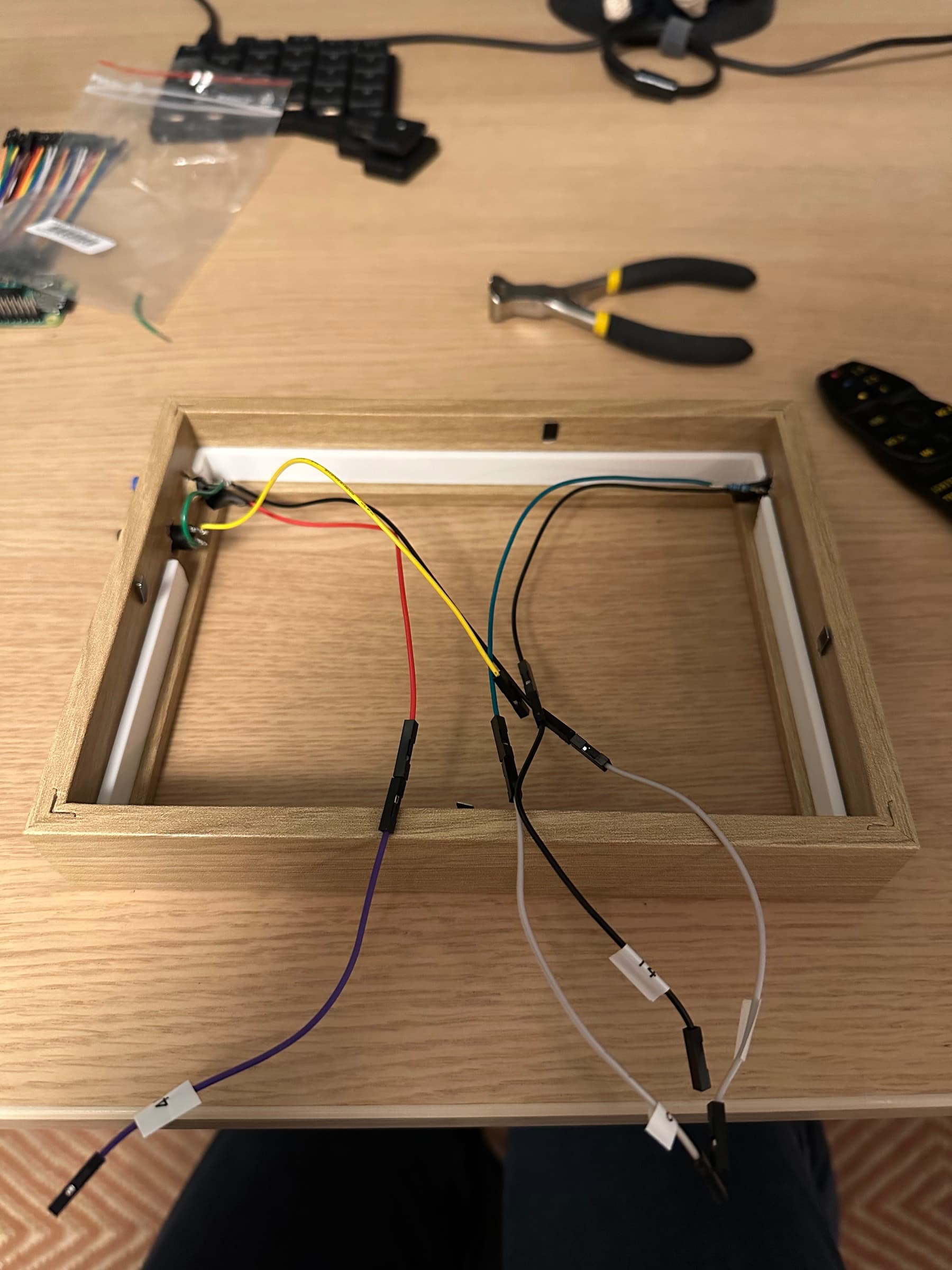

I used a picture frame from IKEA and cut the mat a bit so it would fit.

Then I drilled three holes: two for the buttons and one for an LED. I soldered everything together and then connected it all.

I connected the button to GPIO 21 and ground.

I connected the LED to GPIO 13 and ground (just remember to add a suitable resistor so you don’t burn the LED).
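What counts as a "suitable" resistor follows from Ohm's law: the resistor has to drop whatever voltage the LED does not. The Pi's GPIO pins run at 3.3 V; the 2.0 V forward voltage and 10 mA current below are assumed example values, so check the datasheet for your LED.

```python
def led_resistor_ohms(supply_v=3.3, forward_v=2.0, current_a=0.010):
    """Series resistor value for an LED, from Ohm's law."""
    if forward_v >= supply_v:
        raise ValueError("LED forward voltage must be below the supply voltage")
    return (supply_v - forward_v) / current_a


# With the assumed values this comes out at roughly 130 ohms.
print(round(led_resistor_ohms()))
```

In practice you would pick the next standard resistor value at or above the result, which keeps the current slightly below the target.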

Example code to try it out:

```python
from gpiozero import LED, Button
from signal import pause

LED_PIN = 13     # Connect the resistor/LED to GPIO 13
BUTTON_PIN = 21  # Connect the button to GPIO 21

led = LED(LED_PIN)
button = Button(BUTTON_PIN, pull_up=True, bounce_time=0.05)


def on_press():
    led.on()


def on_release():
    led.off()


button.when_pressed = on_press
button.when_released = on_release

pause()
```

Set up the application

I use an MQTT client to receive and send events to Home Assistant. When a device I care about (engine heater, bike charger, washing machine, dryer) turns on or off, an event is published that the program listens for. On receipt, I trigger a “fast render” (~1 s refresh including display time).

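```python
# Assumed import: the calls below match the paho-mqtt client API.
import paho.mqtt.client as mqtt

# topic, the MQTT_* settings, compose_panel, render, fast_render and
# update_device_by_topic live elsewhere in the program.


def on_connect(client, userdata, flags, rc):
    # Subscribing here means the subscription is renewed after a reconnect
    client.subscribe(topic)


def on_message(client, userdata, msg):
    payload = msg.payload.decode("utf-8")
    is_on = payload.lower() == "on"
    updated_device = update_device_by_topic(msg.topic, is_on)
    if updated_device:
        panel_image = compose_panel()
        fast_render(panel_image)


if __name__ == "__main__":
    # Initial render
    panel_image = compose_panel()
    render(panel_image)

    # paho-mqtt 1.x style; with 2.x, pass mqtt.CallbackAPIVersion.VERSION1
    # as the first argument to keep these callback signatures.
    client = mqtt.Client()
    client.username_pw_set(MQTT_USERNAME, MQTT_PASSWORD)
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect(MQTT_HOST, MQTT_PORT, 60)
    client.loop_forever()
```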
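The message handler only redraws when `update_device_by_topic` returns something truthy. One way such a helper might look is sketched below; the topics, device names, and the dictionary layout are hypothetical and depend on your Home Assistant setup.

```python
# Hypothetical topics and device names, for illustration only.
DEVICES = {
    "home/engine_heater/state": {"name": "Engine heater", "is_on": False},
    "home/washing_machine/state": {"name": "Washing machine", "is_on": False},
}


def update_device_by_topic(topic, is_on):
    """Store the new state and return the device, or None if nothing changed."""
    device = DEVICES.get(topic)
    if device is None or device["is_on"] == is_on:
        return None  # unknown topic or repeated state: no redraw needed
    device["is_on"] = is_on
    return device
```

Returning `None` for repeated states avoids refreshing the display when a device re-publishes a state it is already in.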

One button sends an MQTT event to HA to start the engine heater. This triggers a small dialog (“Starting the engine heater”) which auto‑dismisses after 5 s. When the heater is running (regardless of how it was started), the LED lights up. From across the room you can instantly tell the status. In summer I may repurpose it to show if the bike charger is active—or just invent a new excuse for a tiny glowing light.
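The auto-dismissing dialog can be modelled with a timer from the standard library. This is a sketch under assumptions: the `Dialog` class and its `on_change` callback are invented for this example, and in a real program the callback would trigger a redraw of the panel.

```python
import threading


class Dialog:
    """Holds a temporary message and clears it after a timeout."""

    def __init__(self, on_change, timeout_s=5.0):
        self.message = None
        self._on_change = on_change  # called whenever the panel should be redrawn
        self._timeout_s = timeout_s
        self._generation = 0
        self._lock = threading.Lock()

    def show(self, message):
        with self._lock:
            self._generation += 1
            generation = self._generation
            self.message = message
        timer = threading.Timer(self._timeout_s, self._dismiss, args=(generation,))
        timer.daemon = True  # do not keep the program alive just for a dialog
        timer.start()
        self._on_change()

    def _dismiss(self, generation):
        with self._lock:
            if generation != self._generation:
                return  # a newer dialog is showing; leave it alone
            self.message = None
        self._on_change()
```

The generation counter means an older timer that fires late cannot clear a dialog that was shown after it.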

I fetch price and consumption data directly from my electricity provider (I love that they have a public GraphQL API for this).
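A GraphQL API is typically called with an HTTP POST whose JSON body carries the query. The sketch below only builds such a request; the endpoint URL, the bearer-token authentication, and the query fields are all placeholders, since they depend entirely on the provider's schema.

```python
import json
import urllib.request


def build_graphql_request(endpoint, token, query):
    """Build (but do not send) a GraphQL POST request with a bearer token."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Hypothetical query: the field names are made up for this example.
PRICE_QUERY = "{ currentPrice { total startsAt } }"

request = build_graphql_request("https://example.com/graphql", "MY_TOKEN", PRICE_QUERY)
# To send it: json.load(urllib.request.urlopen(request, timeout=10))
```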

Weather data comes from https://api.openweathermap.org
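As a rough sketch of the weather step, the code below builds a URL for OpenWeatherMap's current-weather endpoint and picks two fields out of the response. Which endpoint and fields the dashboard really uses is not stated here, so this is an assumption; the coordinates, API key, and sample values are placeholders.

```python
import json
from urllib.parse import urlencode

API_URL = "https://api.openweathermap.org/data/2.5/weather"


def weather_url(lat, lon, api_key):
    """URL for the current-weather endpoint, in metric units."""
    params = {"lat": lat, "lon": lon, "appid": api_key, "units": "metric"}
    return f"{API_URL}?{urlencode(params)}"


def summarize(payload):
    """Pick out the two values a small panel has room for."""
    return {
        "temp_c": payload["main"]["temp"],
        "description": payload["weather"][0]["description"],
    }


# Trimmed sample in the shape the endpoint returns (values made up).
sample = json.loads('{"main": {"temp": 4.2}, "weather": [{"description": "light rain"}]}')
print(weather_url(59.33, 18.07, "YOUR_API_KEY"))
print(summarize(sample))
```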

I also show upcoming garbage collection dates (hardcoded dates).
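With hardcoded dates, the only logic needed is picking the next few that have not passed yet. A minimal sketch, with placeholder dates and a made-up helper name:

```python
from datetime import date

# Placeholder dates; replace with the schedule for your own address.
COLLECTION_DATES = [
    date(2025, 1, 7),
    date(2025, 1, 21),
    date(2025, 2, 4),
    date(2025, 2, 18),
]


def upcoming(dates, today, count=2):
    """Return the next `count` dates that fall on or after `today`."""
    return sorted(d for d in dates if d >= today)[:count]


for d in upcoming(COLLECTION_DATES, date.today()):
    print(d.strftime("%a %d %b"))
```

Passing `today` in as an argument keeps the function easy to test with a fixed date.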

We plan meals in the iPhone Notes app. I eventually built a Shortcut that posts the meal list.

To upload the menu from your phone, you need an endpoint accessible on the internet. You could host a simple web server on your Raspberry Pi, but that requires exposing it to the internet (or only uploading when connected to your home Wi‑Fi).

A simpler alternative is to use a cloud function. For example, you can create a small Deno script using Deno KV storage. Deno Deploy is a fantastic service and easy to use. Here’s a sample script:

```ts
const kv = await Deno.openKv();

Deno.serve(async (req) => {
  if (req.method === "GET") {
    const entry = await kv.get(["menu"]);
    return new Response(JSON.stringify(entry.value || []), {
      headers: {
        "Content-Type": "application/json",
      },
    });
  }

  const body = await req.json();
  const content = (body.content as string).replace("Meal Plan\n\n", "");
  const firstSection = content.split("\n\n")[0];
  const lines = firstSection.split("\n");
  const list = lines
    .values()
    .filter((line) => !line.includes("- [x] "))
    .map((line) => line.replaceAll("- [ ] ", "").trim())
    .take(10)
    .toArray();

  const result = await kv.set(["menu"], list);
  return new Response(result.ok ? "OK" : "Error");
});
```
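To make the parsing steps easier to follow, here is the same logic mirrored in Python and run on a made-up note. The note text is an assumption about what the Shortcut sends, inferred from the strings the script looks for.

```python
def parse_menu(content, limit=10):
    """Mirror of the parsing steps in the Deno script, for illustration."""
    content = content.replace("Meal Plan\n\n", "", 1)  # drop the note title
    first_section = content.split("\n\n")[0]           # keep text up to the first blank line
    meals = []
    for line in first_section.split("\n"):
        if "- [x] " in line:
            continue  # checked items are skipped
        meals.append(line.replace("- [ ] ", "").strip())
    return meals[:limit]


note = "Meal Plan\n\n- [x] Tacos\n- [ ] Lasagna\n- [ ] Fish soup\n\nShopping list\n- [ ] Milk"
print(parse_menu(note))  # ['Lasagna', 'Fish soup']
```

Only the first section survives, so anything after the first blank line (the shopping list in this sample) never reaches the display.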

Most of my time went into getting the data out of Notes. The only workable way I found was a Shortcut that I run manually, and it took a lot of trial and error to get it working.

Alternatives (like switching to a different notes app with an API) would mean convincing my partner to change apps—a harder engineering problem. I’ll probably try that at some point.

Do you want to build your own E‑Ink dashboard? This blog post is just a brief overview of the process. I have created a deep dive guide with code included here: https://karlssonoskar.gumroad.com/l/eink-dashboard

If you have any questions, any questions at all, just send me an email at hello@tjoskar.dev